But should you? That’s complicated.


Last August, I told you that ChatGPT can’t grade your papers.

Now, in the spring of 2024, this is no longer true. Grading papers, one of an instructor’s most onerous and time-consuming tasks, can be automated and streamlined with GPT Builder (which is available with a paid ChatGPT Plus subscription, currently $20/month).

In this post, I’m going to show you how to use OpenAI’s GPT Builder to build a chatbot that can help you grade your papers and how to test it out to see how well it works.

But then, because we all recall Jeff Goldblum’s second-most memorable line from Jurassic Park (no, not the one about life, uh; the could/should one), I want to talk about some of the implications and limitations of using a chatbot to grade your papers.

Previous posts in this series:

How to Use OpenAI’s GPT Builder: A Beginner’s Guide for Educators – a walkthrough of the basics of building a GPT chatbot

OpenAI’s GPT Builder for Educators: Introduction to the Bot Teaching Process – a deeper look at how ChatGPT learns and becomes more sophisticated over time, meaning that we can apply the same iterative teaching processes to creating chatbots that we use in the classroom

Building a Paper-Grading Chatbot

Teaching the Bot

Last year, when I tested ChatGPT-3.5 on grading student essays, I used the sample essays from the ACT test prep website as my baseline since they use a clear rubric and showcase a range of writing proficiency.

This time, I’ve built a paper-grading chatbot with GPT-4 and taught it to grade the ACT sample essays. I’ll walk through that process, and then we can assess how well it performs.

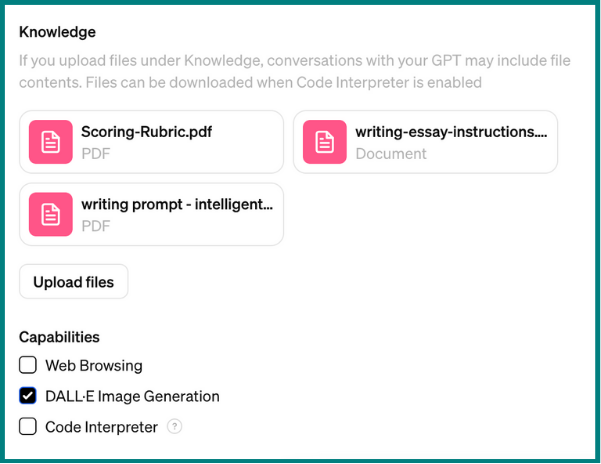

I began by uploading the essay writing instructions, sample prompt 1, and scoring rubric to the GPT’s knowledge base. I also disabled web browsing so that we know the GPT isn’t pulling its scores from the ACT website.

Then I told GPT Builder: “Make an essay grader that scores essays based on the instructions, prompt, and rubric that have been uploaded to your knowledge base.”

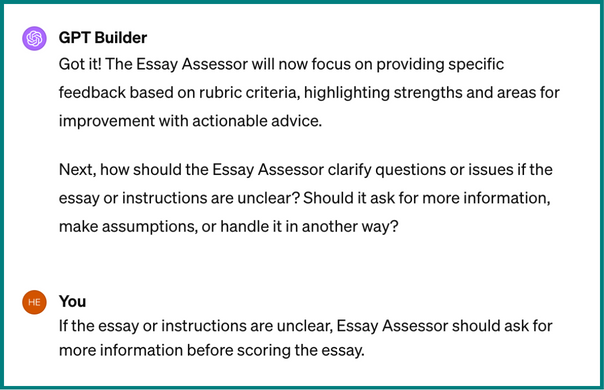

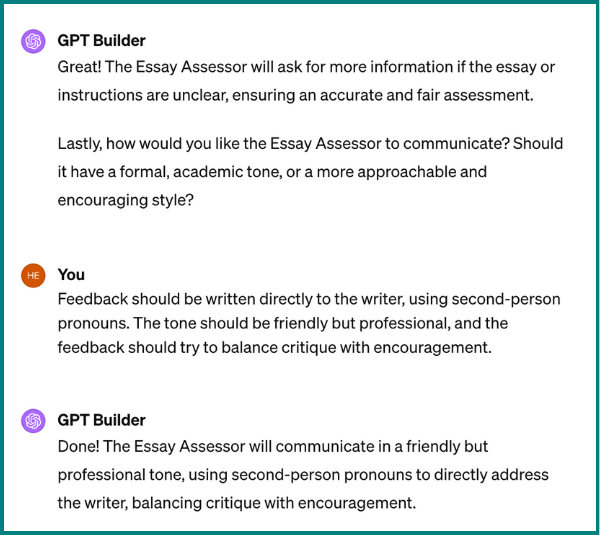

Great! said GPT Builder. I accepted its suggested name (“Essay Assessor”) and profile picture (a drawing of a red pen lying across a stack of papers), and then prompted GPT Builder with:

“Essays should be assessed according to the rubric criteria. Essay Assessor should give brief feedback for each criterion followed by a paragraph of global feedback. Feedback should identify areas of strength in the essay and how the writer can continue to develop and use those strengths, and areas for improvement and how the writer can develop and improve in those areas. The underlying purpose of the feedback is to encourage the writer to keep practicing and growing in the specific areas of strength/weakness identified.”

Got it! replied GPT Builder.

Testing and Results

After giving the GPT its instructions, I tested it by having it grade the example essays on the ACT website. Some of the numeric scores it assigned were a point off from the scores given on the website, but it was at least internally consistent: when asked to score the same essay in multiple conversations, it produced similar scores and feedback.
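If you want to run this kind of check on your own bot, it helps to write the scores down and compare them systematically. The sketch below is one way to do that, assuming you have copied the scores by hand from several separate conversations. The function name `agreement_report` and the essay labels are made up for illustration, and the numbers are placeholders, not the results of the test described above.

```python
from statistics import mean, pstdev


def agreement_report(reference, runs):
    """Compare repeated bot scores for each essay with a reference score.

    reference: dict mapping essay id -> published reference score
    runs: dict mapping essay id -> list of bot scores, one per conversation
    """
    report = {}
    for essay_id, ref_score in reference.items():
        bot_scores = runs[essay_id]
        report[essay_id] = {
            "reference": ref_score,
            "bot_mean": mean(bot_scores),
            # Spread across conversations: 0 means the same score every time.
            "spread": pstdev(bot_scores),
            # True only if every run landed within one point of the reference.
            "within_one": all(abs(s - ref_score) <= 1 for s in bot_scores),
        }
    return report


# Placeholder numbers for illustration only (hypothetical essays and scores).
reference_scores = {"essay_a": 4, "essay_b": 2}
bot_runs = {"essay_a": [3, 3, 4], "essay_b": [2, 2, 2]}

for essay_id, row in agreement_report(reference_scores, bot_runs).items():
    print(essay_id, row)
```

A low spread tells you the bot is consistent with itself. The `within_one` flag tells you whether it stays close to the human-assigned score. Those are two different questions, and a bot can pass one while failing the other.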

Given a rubric and the type of feedback it should give, the GPT identified how the writing was succeeding and how it could be improved. For example, here is the global feedback it provided for the “4” essay from the ACT website, which the GPT scored a 3:

Your essay thoughtfully addresses the topic of intelligent machines, highlighting important concerns about their impact on society and human interactions. Your main points are compelling and invite reflection. However, to elevate your essay further, consider refining your analysis to include a balanced consideration of opposing viewpoints and clarifying the logical connections between your examples and the essay’s thesis. Organizational improvements, such as clearer transitions and a more deliberate structure, would help in presenting a more compelling argument. Additionally, polishing the language and grammar will ensure that your ideas are communicated effectively. By addressing these areas, you can enhance the persuasiveness and impact of your writing. Keep exploring these complex themes with the same critical eye and commitment to depth.

When I asked it to provide specific examples of strengths and areas for improvement using direct quotes from the essay, it did well.

You can read the whole conversation here.

Overall, the GPT chatbot does a pretty good job of assigning consistent scores and providing objective feedback on student writing that not only comments on the essay in front of it but also provides direction for future writing.

I want to stress here that building a grading chatbot must involve a detailed, specific grading rubric – see the ACT writing test scoring rubric for an example of this. The use of rubrics in teaching writing is controversial (more on this in a moment), but there must be concrete criteria that the chatbot can evaluate the writing against. This is the only way to ensure consistency in how the chatbot assesses the writing.
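To make "concrete criteria" tangible, here is a small sketch of a rubric written out as structured data and turned into plain-text instructions you could paste into a GPT's configuration. The `Criterion` class and `build_instructions` function are hypothetical helpers, not part of GPT Builder. The four criterion names follow the domains of the ACT writing rubric as I understand them, the descriptors are my own short placeholders rather than ACT's wording, and the 1 to 6 scale is an assumption you should adjust to your own rubric.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    description: str  # what the grader should look for (placeholder wording)


def build_instructions(criteria, low=1, high=6):
    """Turn a list of rubric criteria into plain-text grading instructions."""
    lines = [
        f"Score each criterion from {low} to {high} "
        "and give brief feedback for each:"
    ]
    for number, criterion in enumerate(criteria, start=1):
        lines.append(f"{number}. {criterion.name}: {criterion.description}")
    lines.append("Finish with one paragraph of global feedback.")
    return "\n".join(lines)


# Placeholder descriptors for illustration; replace with your rubric's language.
rubric = [
    Criterion("Ideas and Analysis", "engages with multiple perspectives on the issue"),
    Criterion("Development and Support", "backs up claims with reasoning and examples"),
    Criterion("Organization", "groups and sequences ideas with clear transitions"),
    Criterion("Language Use and Conventions", "uses precise wording and correct grammar"),
]

print(build_instructions(rubric))
```

Writing the rubric out this way forces you to name every criterion and say what it means, which is the same discipline the chatbot needs from you.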

So, can we use ChatGPT to grade papers? If you were evaluating student writing in a scenario where objectivity and consistency were a priority and in which there would be no further follow-up with the student on this piece of writing (for example, scoring writing tests), a GPT chatbot could be an effective tool for processing a lot of writing quickly.

But I’m not sure we should.

Yes, ChatGPT can grade and provide feedback on a lot of writing quickly, objectively, and clearly. But responding to student writing is about more than giving it a grade and identifying strengths and areas for improvement.

What Are We Doing When We Respond to Student Writing?

Given the choice between using a chatbot that can provide grades and feedback on a stack of essays in minutes or spending a whole weekend doing it yourself, it looks like an easy decision. But we risk losing sight of why we assign and respond to papers.

Erika L. Bass (2023) summarizes the general consensus in the field of composition pedagogy on the purpose of, and strategy for, responding to student writing:

Providing feedback on student writing is based on the concept of growth mindset […], which suggests skills can be grown and are not innate; that is, writers are grown, not born. Feedback can be a powerful tool for growing writers. For the writing process, this means feedback has the power to instill in students they can grow as writers, reinforcing the adage that everyone is a writer […]. When feedback is focused on correcting grammatical, typographical, and other sentence-level errors, students’ writing does not improve. … [Feedback is] more effective when it has more information (i.e., when feedback helps the student understand the mistake they made and why they made it); feedback that is simple reinforcement or punishment is not as effective. In other words, students find feedback to be most useful when it provides them with direction for strategizing revision.2

At first glance, ChatGPT seems like it can do this. It identifies and reinforces skills and explains how to improve deficits. But it cannot do this in dialogue with the writer. It can help the student understand the mistake they made, but not why they made it. The latter requires developing a relationship between writer and instructor, developing a baseline understanding of their writing abilities and voice, and learning their strengths and needs.

ChatGPT cannot deliver writing assessment that is “dynamic and relational rather than static and standardized”3 or adapt its feedback based on students’ unique needs. Without a working relationship with the writer, ChatGPT can’t praise students for making improvements, taking risks, or growing as writers, and it can’t identify how to encourage their growth.

And ChatGPT cannot create an authentic human response to writing. In her essay “The View from Somewhere” (2007), Maja Wilson reminds us that reading and responding to a piece of writing is not an objective process, but an experiential one, a conversation between reader and writer. She writes,

How can teachers view writing assessment as a conversation? First, pay attention to what goes on in your mind as you read students’ writing, and articulate those reactions; give each student your experience of reading her or his paper. As students respond to your reactions, you might find that your interpretation and suggestions change. Be open to each writer’s purposes, experiences, and personality, using everything you know about that person and yourself as a reader.

ChatGPT is capable of many things, but it cannot tell students what it felt like to read their writing or nurture creative and original thinking.

Hybridizing the Grading Process

Of course, the reality is that there are usually more papers to grade than time to grade them thoughtfully. Perhaps the best solution is to grade papers and respond to student writing in partnership with ChatGPT: have ChatGPT generate feedback, and then go in behind it and adapt the feedback to the student’s unique needs. This way ChatGPT could be used to speed up the process, but not to fully automate it.

This approach also corrects for other issues that may arise from using ChatGPT to assess student writing, namely bias and inclusivity. AI systems can perpetuate biases present in their training data. How can you ensure the grading chatbot is not inherently biased against certain dialects, writing styles, or cultural expressions? Does it adequately handle diverse perspectives and expressions found in student essays without marginalization? Some of this can be addressed through the use of clear rubric criteria, but ultimately, this must be mitigated by mediating the feedback through the instructor.

The best use of AI is to have a human on both sides of it – a human deciding what the input should be (creating grading criteria, crafting prompts) and then adapting the output (tailoring feedback, experiencing the piece of writing as a reader). A chatbot can provide a first round of feedback that explains to the student how their writing aligns to the assignment expectations, but it cannot relate to them as a human reader to a human writer.
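If you keep your grading notes in a script or spreadsheet export, the "human on both sides" idea can be enforced as a simple rule: chatbot feedback is only a draft until the instructor has revised it. The sketch below shows one way to model that. The `FeedbackDraft` class and its method names are hypothetical, and the example text is invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FeedbackDraft:
    student: str
    bot_feedback: str  # first-pass text generated by the chatbot
    instructor_feedback: Optional[str] = None  # set only after human review

    def review(self, revised_text: str) -> None:
        """Record the instructor's adapted version of the feedback."""
        if not revised_text.strip():
            raise ValueError("Reviewed feedback cannot be empty.")
        self.instructor_feedback = revised_text

    def release(self) -> str:
        """Return feedback for the student, but only after instructor review."""
        if self.instructor_feedback is None:
            raise RuntimeError(
                f"Feedback for {self.student} has not been reviewed yet."
            )
        return self.instructor_feedback


# Invented example: the instructor adapts the bot's draft before releasing it.
draft = FeedbackDraft("Student A", "Transitions between paragraphs are unclear.")
draft.review(
    "Your transitions are much smoother than in your last essay. "
    "The jump into paragraph three is the one place I got lost."
)
print(draft.release())
```

The point of the design is that `release` has no path that returns the raw chatbot text, so the bot's output can speed you up but never reaches the student unread.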

The bottom line: Could you use ChatGPT to grade your papers? Yes. But should you? Not on its own. As one step in the grading process, though, it can save you a lot of time.

Abi Bechtel

Abi Bechtel is a writer, educator, and ChatGPT enthusiast. They have an MFA in Creative Writing from the Northeast Ohio MFA program through the University of Akron, and they just think generative AI is neat.

Teresa Potter, M.Ed., specializes in strategic projects that drive measurable results while finding creative solutions. Her organization, T. Potter Instructional Design (TPID), partners with subject matter experts to make training development easy. TPID works with associations, nonprofits, and educational institutions across industries like healthcare, human services, leadership, and AI in education. Their goal is to empower experts to do more of what they do well through the capabilities of teaching and learning science.

1. Currently, the essay prompt on the ACT website is about intelligent machines. “Automation is generally seen as a sign of progress, but what is lost when we replace humans with machines? Given the accelerating variety and prevalence of intelligent machines, it is worth examining the implications and meaning of their presence in our lives.” Coincidence??

2. Bass, E. L. (2023). Using university–school partnerships to facilitate preservice teachers’ reading and responding to student writing. Journal of Adolescent & Adult Literacy, 66(6), 377–381. https://doi.org/10.1002/jaal.1283

3. Beck, S. W., Jones, K. M., Storm, S. W., & Smith, H. (2020). Scaffolding students’ writing processes through dialogic assessment. Journal of Adolescent & Adult Literacy, 63(6), 651–660. https://doi.org/10.1002/jaal.1039