I tried using ChatGPT to grade papers. Here’s why it didn’t work.

When I was teaching freshman composition, I had a ritual for the day after students’ final portfolios were due. I would stare at the towering pile of essays on my desk – or, later, at the long list of file submissions in the course management system – and I would read this McSweeney’s piece to myself out loud: I Would Rather Do Anything Else Than Grade Your Final Papers by Robin Lee Mozer.

I would rather base jump off of the parking garage next to the student activity center or eat that entire sketchy tray of taco meat left over from last week’s student achievement luncheon that’s sitting in the department refrigerator or walk all the way from my house to the airport on my hands than grade your Final Papers.

I am sure there are some writing professors who enjoy grading essays, but I was never one of them. (As Mozer says, I would rather eat beef stroganoff.) If I could outsource this task to someone else and trust that they would be able to grade the papers with consistency and attention to detail and to hold students accountable to the standards established in class, I would certainly be tempted to hand over the whole stack. Of course, giving my students’ essays to another person to grade would be unethical and probably a FERPA violation, so I never did, but I sure thought about it.

But that was before ChatGPT came along. What about using ChatGPT? That’s not a person, and I would still be overseeing the grading. Could I run students’ papers through ChatGPT and let the AI do the heavy lifting?

To cut the suspense, I’ll jump ahead to the conclusion. After testing this out, I am sad to say: No, ChatGPT cannot grade your papers for you.

Let me explain how I tested this.

The Test: Part One, Establishing a Baseline

Because of privacy concerns, I did not want to try putting actual student writing into ChatGPT. Instead, I used the example essays from the ACT website. The ACT website provides thorough scoring criteria in addition to scored example essays, ranging from poor to excellent. That made the example essays ideal test samples: I could put them into ChatGPT and compare its scores against the ACT website’s.

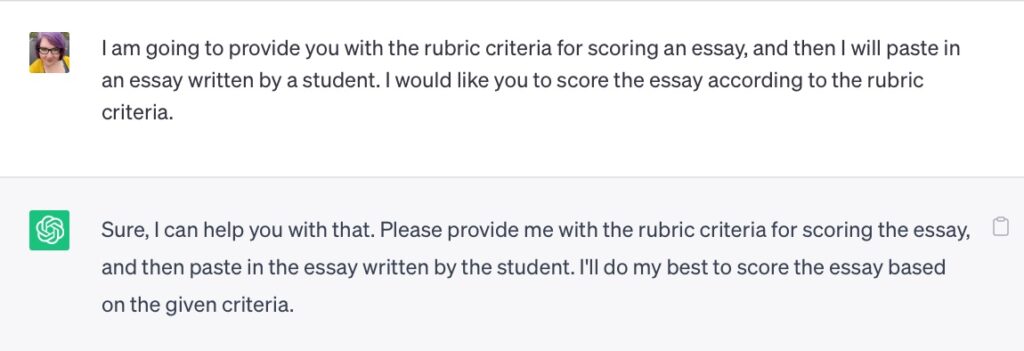

I began by copying and pasting in the entire scoring rubric, then the writing sample prompt, and finally, several essays, one by one. You can read the whole conversation here.

First, I pasted in two low-scoring essays from the ACT website. On a scale from 2 to 6, ChatGPT scored them both as 2s, the same as the website did.

Next, I entered the essays provided as an example of a level-5 essay and a level-6 essay. ChatGPT gave both essays a score of 4.

Finally, I asked ChatGPT to generate an essay in response to the prompt that would score a 6 based on the rubric criteria. It churned out an organized, thoughtful-sounding 6-paragraph essay.

“Now please provide a score breakdown of this essay per the rubric,” I told it.

“Certainly!” responded ChatGPT, and wrote up a score analysis of the essay.

ChatGPT’s analysis: It gave its own supposedly level-6 essay – the essay that it created just one prompt earlier following instructions to create an essay that would earn a 6 – a 5.

Next, I entered another essay prompt – this one from the Kaplan test prep website – along with its example essay, and asked ChatGPT to score the essay using the same rubric criteria. It also scored this essay as a 5, which looked consistent with the scored essays on the ACT website.

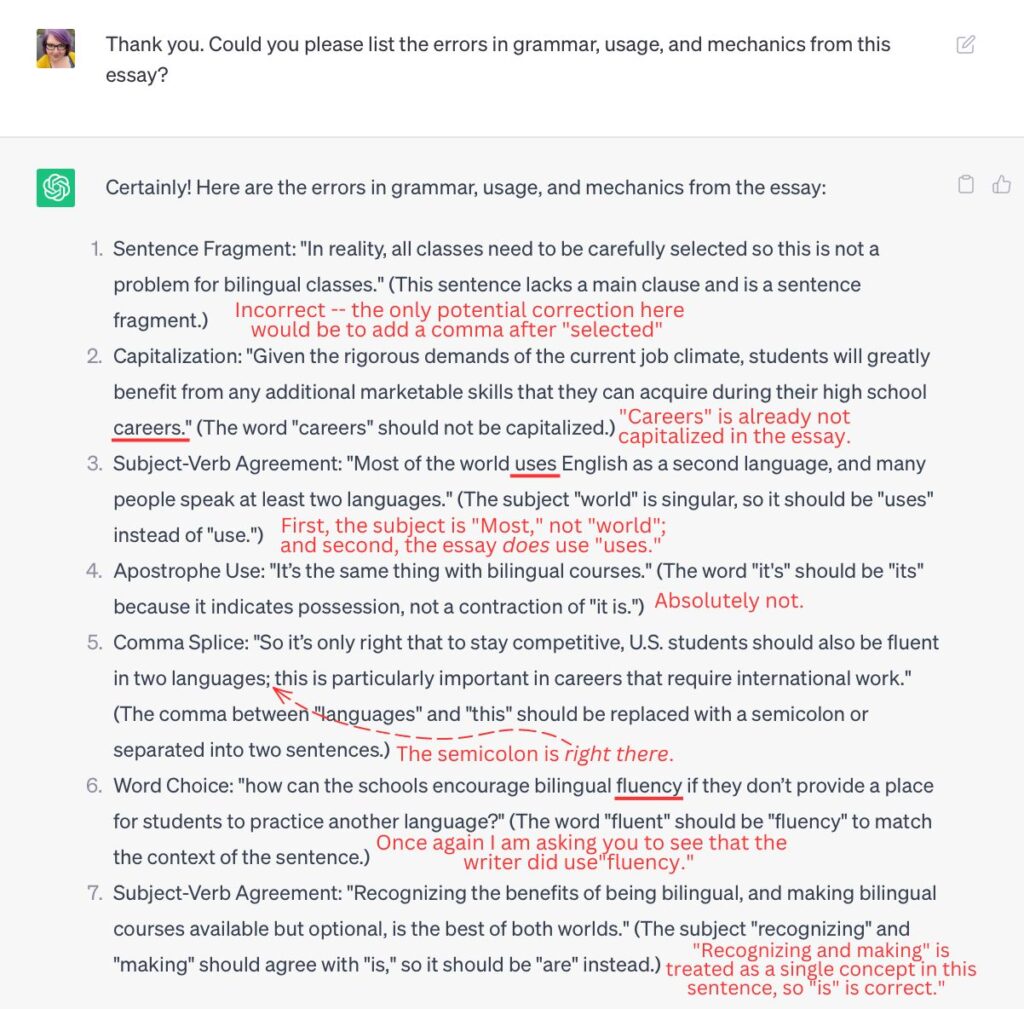

In the feedback on the Kaplan essay, ChatGPT noted, “There are only minor errors in grammar, usage, and mechanics, which do not significantly impact meaning.” I was surprised; I hadn’t spotted any errors when I skimmed the essay. Curious, I asked: Could you please list the errors in grammar, usage, and mechanics from this essay?

ChatGPT spat out a list of 7 errors in the essay, explaining what made each one incorrect and giving an example of how it could be corrected. But there was a problem: None of the errors were errors, and none of ChatGPT’s explanations were correct.

So far, ChatGPT is only moderately consistent at evaluating an essay against the rubric, and completely baffling at providing specific feedback on grammar and mechanics.

The Test: Part Two, Checking Reproducibility

Next, I started a new conversation with ChatGPT. (You can read the whole conversation here.) I began the same way, pasting in the ACT website’s rubric criteria and writing prompt. But then, instead of the example ACT essays, I pasted in the essay that ChatGPT wrote – the essay that it wrote after being prompted to write a 6, and then scored as a 5.

The result:

It gave its essay a 4.

The Test: Part Three, Once More Unto the Breach

I opened a new chat and again pasted in the rubric criteria and the prompt. (Here’s the full conversation.) Then I pasted in the level-5 writing sample from the ACT website, which ChatGPT had scored as a 4 in the first chat.

This time, it gave the essay a 3.

I tested the ACT website’s level-6 example, which ChatGPT previously also scored as a 4. This time, ChatGPT gave it a slightly higher score than the level-5 example, but still a 3.

The essay ChatGPT wrote earlier as an example of a 6? This time, ChatGPT gave it a 5.

Obviously, ChatGPT was struggling with consistently applying the rubric criteria. Could it be a problem of needing to calibrate ChatGPT’s assessment of what a level-6 essay would look like?

The Test: Part Four, Calibration

I started yet another conversation to test this. Once again, I pasted in the rubric and essay prompt. Then I dropped in the ACT level 2 and level 6 essays and told ChatGPT that these were examples of the lowest and highest scores so that it could compare additional essays against the rubric and against what constituted a top-scoring or low-scoring essay.

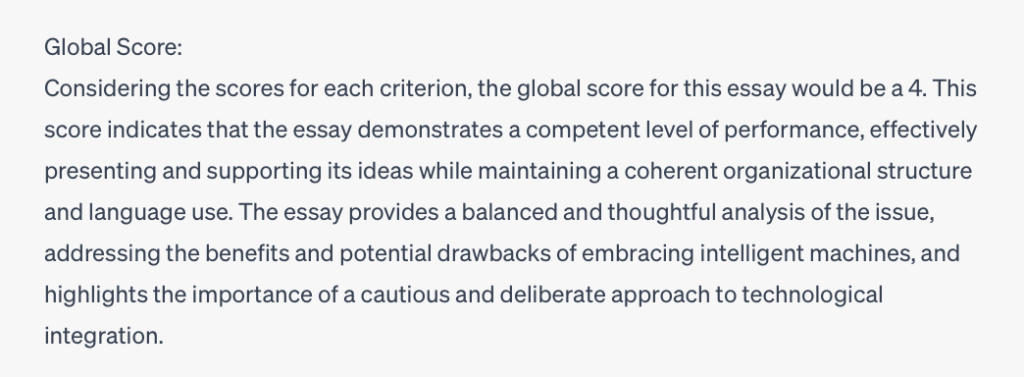

Then I asked it to score the ACT level 5, 4, and 3 essays. ChatGPT scored these as 4.5, 3.75, and 3.5, respectively, more closely aligned with how the ACT website rated them.

So I asked it to score the essay written by ChatGPT as an example of a 6, which you’ll recall ChatGPT elsewhere scored as a 5, a 4, and a 5.

Reader, this time, ChatGPT scored its own level-6 essay at a 3. So much for calibration being the problem.
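For readers who like to see the numbers side by side, here is a minimal Python sketch that tallies the scores reported above. The scores come straight from the four conversations described in this post. The variable and function names (`self_scores`, `calibrated`, `score_spread`, `mean_absolute_gap`) are made up for illustration and are not part of any tool used in the test.

```python
from statistics import mean

# Scores ChatGPT gave the essay it wrote to earn a 6,
# one entry per conversation (Parts One through Four of this post).
self_scores = {"part_one": 5, "part_two": 4, "part_three": 5, "part_four": 3}

# Part Four: ACT website score -> ChatGPT score after calibration.
calibrated = {5: 4.5, 4: 3.75, 3: 3.5}


def score_spread(scores):
    """Return (lowest, highest, range) for a collection of scores."""
    values = list(scores)
    return min(values), max(values), max(values) - min(values)


def mean_absolute_gap(pairs):
    """Average absolute difference between reference and ChatGPT scores."""
    return mean(abs(reference - got) for reference, got in pairs.items())


low, high, spread = score_spread(self_scores.values())
print(f"Same essay, four conversations: low={low}, high={high}, spread={spread}")
print(f"Calibrated gap vs. ACT scores: {mean_absolute_gap(calibrated):.2f} points")
```

On these numbers, the same essay swings across two full points on the rubric and never reaches the 6 it was written to earn, even though the calibrated scores for the ACT samples land within about half a point of the website’s.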

Conclusion

So, can ChatGPT be used to grade student papers?

Nope. It is not advisable to use ChatGPT, or at least GPT-3.5 at its current capabilities [1], to grade student papers. It’s inconsistent, it finds errors where there are none, and it isn’t really doing analysis.

Remember, ChatGPT is not an analysis machine – it is a language machine. It is not built to critically assess papers and provide thoughtful feedback; it is built to produce language that sounds like thoughtful feedback.

We haven’t even dug into the student privacy concerns yet; the Common Sense Privacy Program gives ChatGPT a 48% rating. I would strongly advise against putting students’ work into ChatGPT, and certainly not with any identifying information attached.

How Else Can ChatGPT Be Used in the Grading Process?

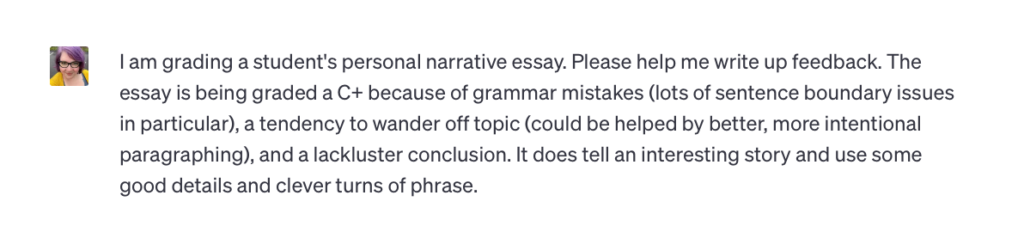

That doesn’t mean there’s no room to use ChatGPT to help you save time grading papers. One use for it is to write up coherent feedback. You can give ChatGPT a shorthand list of things you want to point out, and ChatGPT can turn that into sentences that you can give a student as feedback.
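As a rough illustration of that workflow, the sketch below assembles shorthand notes into a prompt you could paste into ChatGPT. It only builds text and does not contact any service. The function name `build_feedback_prompt`, its `tone` parameter, and the sample notes are all hypothetical, and the notes should contain your own observations only, with no student names or other identifying details.

```python
def build_feedback_prompt(notes, tone="encouraging but direct"):
    """Turn shorthand grading notes into a prompt asking for prose feedback.

    `notes` is a list of short strings written by the instructor.
    Blank entries are skipped. Nothing is sent anywhere; the function
    just returns a string.
    """
    cleaned = [note.strip() for note in notes if note.strip()]
    if not cleaned:
        raise ValueError("Provide at least one grading note.")
    bullet_list = "\n".join(f"- {note}" for note in cleaned)
    return (
        "Rewrite the grading notes below as a short paragraph of feedback "
        f"addressed to a student. Keep the tone {tone}. "
        "Do not add any points that are not in the notes.\n\n"
        f"{bullet_list}"
    )


# Hypothetical shorthand notes, written by the instructor after reading the paper.
prompt = build_feedback_prompt([
    "thesis clear, but appears late (end of para 2)",
    "strong use of sources in section 3",
    "  ",
    "conclusion repeats intro; push toward implications",
])
print(prompt)
```

The judgment stays with you: the notes are yours, and the instruction not to add points is there because, as the tests above showed, ChatGPT will happily invent feedback of its own. It is still worth rereading whatever it produces before a student sees it.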

ChatGPT can also help create assignment sheets and grading rubrics, as I explain here: 10 Practical Ways to Use ChatGPT to Make Your Teaching Life Easier (Even If You’re Afraid of AI).

The bottom line is that when it comes to grading papers accurately and consistently, providing your students with targeted feedback that meets them where they are, and assessing your students’ growth as writers through the semester, you are irreplaceable.

Abi Bechtel is a writer, educator, and ChatGPT enthusiast. They have an MFA in Creative Writing from the Northeast Ohio MFA program through the University of Akron, and they just think generative AI is neat.

[1] I only tested this with GPT-3.5, which is the free version of ChatGPT. As of this writing, GPT-4, which OpenAI touts as being “great for tasks requiring creativity and advanced reasoning,” requires a ChatGPT Plus membership at $20/month. It’s possible GPT-4 could handle this task better; however, I suspect that the people who would like to relieve some of the burden of grading papers are not in the demographic of people who want to spend $20 a month on ChatGPT Plus.